LCLStream

(LCLS/Oak Ridge)

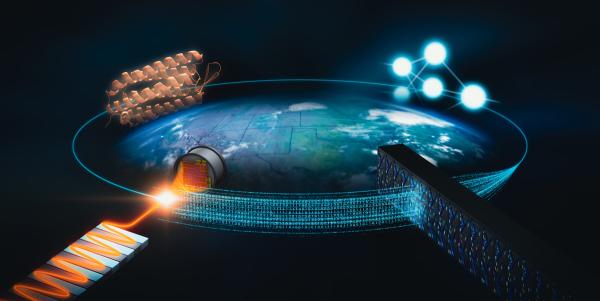

Experiments at X-ray light sources and neutron sources enable the direct observation and characterization of materials and molecular assemblies critical for energy research. Ongoing facility upgrades are exponentially increasing the rate and volume of collected data. This opens new frontiers of scientific research but also requires advances in computing, algorithms, and analysis to exploit these data effectively.

As data rates surge, accelerated processing workflows are needed to mine continuously streamed data: selecting interesting events, rejecting poor data, and adapting to changing experimental conditions. Real-time data analysis can give users immediate feedback or drive instrument controls directly in self-driving experiments. Autonomous experiment steering, in turn, is poised to maximize the efficiency and quality of data collection. It does this by linking the user's experimental intent, the results of data analysis, and algorithms that drive intelligent data collection and guide the instrument toward optimal operating regimes.

ILLUMINE will provide rapid data analysis and autonomous experiment steering capabilities for cutting-edge research that tightly couples high-throughput experiments, advanced computing architectures, and novel AI/ML algorithms. The goal is to significantly reduce the time needed to optimize instrument configurations, to leverage large datasets, and to optimize the use of oversubscribed beam time. We will deliver these two capabilities for diverse experiments across the facilities. To do so, we will develop algorithms for real-time compression and ML inference at the experiment edge and extend current edge-to-HPC analysis pipelines.

As part of the Integrated Research Infrastructure vision, this project is developing cross-facility workflows. We will also create advanced workflow monitoring and decision support systems, including reinforcement learning for data optimization, methods for handling uncertainty, and high-dimensional search algorithms for experiments. Connecting these two elements is a multi-facility framework for autonomous experiment workflows. It is built on a common interoperability layer and on the widely used Bluesky data collection platform. The framework will package these capabilities into an accessible toolbox of reusable, off-the-shelf components that can be assembled into workflows tailored to specific scientific needs.

Collectively, these advances are poised to unlock the transformative potential of the facility upgrades. They will deliver rapid analysis and workflow monitoring algorithms built on a common, interoperable framework that ensures their broad transferability across facilities, instruments, and experiments. Ultimately, these capabilities will significantly enhance experimental output and enable groundbreaking scientific exploration.

Rapid data analysis and autonomous experiment steering will help shed light on some of the most challenging scientific research areas facing the nation. These include structural biology, materials science, quantum materials, environmental science, nanoscience, nanotechnology, additive manufacturing, and condensed matter physics.

Research Objectives & Milestones

This project will develop a multi-facility framework to address the challenges posed by the growing volume and complexity of data collected at X-ray and neutron sources. By integrating advanced computing, algorithms, and analysis, the framework aims to enable rapid data analysis and autonomous experiment steering.

The framework will leverage real-time compression, machine learning inference, and decision support techniques to optimize data collection and explore the experimental phase space. Built on the Bluesky data collection platform, it will provide accessible, reusable components that improve the efficiency and quality of experiments and unlock new scientific possibilities.

Objectives

- Real-time calibration, reduction, and analysis at the edge: produce ultrafast calibration software for imaging detectors that can operate at 100 kHz; develop a Bragg peak finder that operates on uncalibrated images; investigate shallow transformers for AI/ML-based data reduction and compression on FPGAs.

- Use remote HPC centers, such as OLCF, to develop workflows that connect X-ray and neutron sources to computing resources for facility applications; develop streaming workflows between X-ray and neutron sources and HPC facilities.

- Demonstrate real-time ML model retraining for edge deployment, and develop retraining workflows for data from specific X-ray and neutron sources.

- Develop workflow monitoring and decision support tools for machine-assisted, human-in-the-loop experiments, experiment design optimization, and optimal measurement design over fixed sample spaces.

Highlight Lecture

Revealing the Secrets of Transistors using Supercomputers

By Quynh L. Nguyen